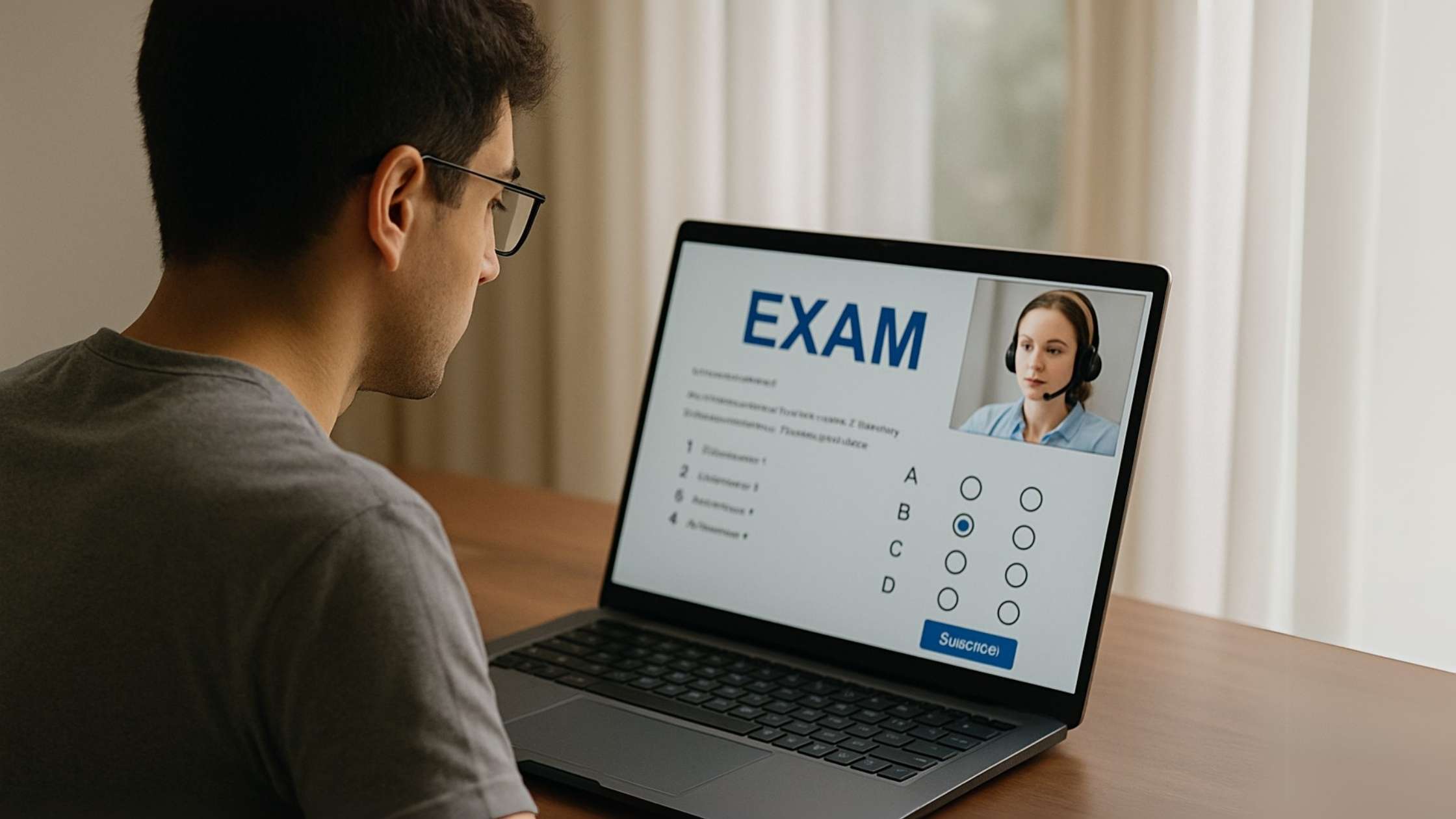

When you hear the term language test, you probably think of sitting in a quiet room with headphones on, answering questions about grammar or pronunciation. But the modern reality is a bit different. In today's world, many of these assessments are taken online, from home, on personal laptops, away from physical test centers. Sounds convenient, right? It is. But it also opens up a whole new world of security threats.

From impersonation to using ChatGPT, Perplexity, Claude, or Meta AI Glasses for real-time answer help, the sophistication of AI cheating methods is growing just as fast as the tech meant to prevent them. And that’s why language testing providers, especially those administering exams like TOEFL, IELTS, and PTE, are stepping up their game.

The Rise of AI Cheating and Digital Exam Threats

Language exams like TOEFL (Test of English as a Foreign Language), IELTS (International English Language Testing System), and PTE (Pearson Test of English) are among the most widely accepted assessments for academic admissions, work visas, and immigration. These high-stakes exams are increasingly offered online, and the ways to cheat on them have multiplied just as quickly.

AI tools, screen-sharing apps, browser extensions, and smart devices have given test-takers unfair advantages. It’s not uncommon to hear of people running Large Language Models (LLMs) in the background to fetch answers or hiring impersonators to sit the exam on their behalf. That’s not just cheating; it’s a direct threat to the legitimacy of public certifications, especially in language testing, where results are often tied to visa, job, or academic requirements.

Building a Fortress: Multi-Layered Security Frameworks

So, how are test providers keeping up? The answer is a layered defense strategy that sounds like something out of a spy movie, but it’s real.

AI Proctoring Agents

AI Proctoring Agents are at the heart of modern test security. These systems don’t sleep, don’t blink, and don’t miss much. An AI agent acts as a virtual invigilator: it watches the test-taker’s eye movements, detects voice activity, flags multiple faces in the camera frame, and keeps an eye on browser activity, all in real time.

Talview’s AI Proctoring Agent, Alvy, offers an intelligent layer that tracks and logs suspicious behavior, alerting live proctors or flagging incidents for later review. It scales easily, which makes it well suited to securing large-scale language tests.

Role of the Secondary Camera in Detecting Cheating

Here’s where things get even more interesting. A primary webcam can only show the test-taker’s face. But what if they’re looking at answers on another screen, or receiving silent cues from someone else in the room? That’s where the secondary camera comes in.

By capturing a wide-angle or over-the-shoulder view, this camera gives AI proctoring agents a complete view of the test environment, reducing blind spots and tightening the exam room perimeter.

Biometric and Multi-Factor Authentication

Before even starting the test, candidates must prove who they are. Providers now combine face recognition, ID verification, and sometimes even behavioral biometrics to verify identity. Add a layer of two-factor or multi-factor authentication, and it becomes far harder for impersonators to sneak in.
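To make the second-factor idea concrete, here is a minimal sketch of a time-based one-time password check in the style of RFC 6238, using only the Python standard library. It is an illustration under stated assumptions, not how any particular exam provider implements authentication; the function names and the one-window tolerance are hypothetical choices.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    counter = unix_time // step
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, as defined in RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_second_factor(secret: bytes, submitted: str, unix_time: int) -> bool:
    """Accept the current code or the one from the previous time window.

    The one-window tolerance is an assumption made for this sketch.
    """
    previous = max(unix_time - 30, 0)
    candidates = (totp(secret, unix_time), totp(secret, previous))
    # compare_digest avoids leaking information through comparison timing
    return any(hmac.compare_digest(submitted, c) for c in candidates)
```

In a real deployment the shared secret would be provisioned per candidate and stored securely, and the code would be only one factor alongside the ID and face checks described above.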

Cloud-Level Security and Data Integrity

Behind the user interface of every exam platform is a cloud architecture that needs just as much protection.

Modern exam platforms are increasingly shifting to Infrastructure as a Service (IaaS) models because they offer scalability and built-in security tools. These platforms implement strict encryption protocols to secure test data and user information.

We’re also seeing widespread adoption of SQL injection prevention, anti-tampering systems, and role-based access management, ensuring that data doesn’t just remain confidential but also unaltered.
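As a rough illustration of two of those controls, the sketch below pairs a parameterized query (the standard defense against SQL injection) with a simple role check. The table layout, role names, and permission map are all hypothetical and exist only for this example; it uses Python's built-in `sqlite3` module with an in-memory database.

```python
import sqlite3

# Hypothetical role-to-permission map, for illustration only.
ROLE_PERMISSIONS = {
    "proctor": {"read_scores"},
    "admin": {"read_scores", "update_scores"},
}


def can(role: str, permission: str) -> bool:
    """Return True if the role has been granted the permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def get_score(conn: sqlite3.Connection, role: str, candidate_id: str):
    """Look up a score, enforcing the role check before touching the data."""
    if not can(role, "read_scores"):
        raise PermissionError(f"role {role!r} may not read scores")
    # The ? placeholder keeps candidate_id as data, never as SQL text.
    row = conn.execute(
        "SELECT score FROM results WHERE candidate_id = ?", (candidate_id,)
    ).fetchone()
    return row[0] if row else None


def demo_connection() -> sqlite3.Connection:
    """Build a throwaway in-memory database with one sample row."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE results (candidate_id TEXT PRIMARY KEY, score INTEGER)")
    conn.execute("INSERT INTO results VALUES (?, ?)", ("cand-001", 87))
    return conn
```

With this setup, a classic injection string such as `x' OR '1'='1` is treated as an ordinary (non-matching) candidate ID rather than being executed as SQL.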

Role of AI Agents and LLMs in Proactive Cheating Detection

Detecting cheating isn’t just about catching someone red-handed anymore. It’s about predicting intent, identifying patterns, and stopping fraud before it happens.

How AI Agents Predict and Flag Suspicious Behavior

Using machine learning, Talview’s Alvy AI agent now analyzes keystroke dynamics, mouse movements, and even pause patterns. If something looks off, such as a test-taker pausing abnormally long before each question, the session gets flagged.

Some systems also look at IP patterns, device switching, and even audio cues to detect if someone’s being fed answers.
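The pause-pattern check described above can be approximated with very simple statistics. The sketch below compares one candidate's typical pause against a cohort baseline using a z-score. It is a toy stand-in, not Alvy's actual model: the function name, the threshold of 3.0, and the choice of the median as the summary statistic are all assumptions.

```python
from statistics import mean, median, stdev


def flag_long_pauses(candidate_pauses, cohort_medians, z_threshold=3.0):
    """Return True if the candidate's median pause is unusually long.

    candidate_pauses: seconds paused before each question for one candidate.
    cohort_medians: median pause of each other candidate (at least two values).
    z_threshold: hypothetical cut-off chosen for this sketch.
    """
    baseline = mean(cohort_medians)
    spread = stdev(cohort_medians)
    if spread == 0:
        return False  # no variation in the cohort, so nothing to compare against
    z_score = (median(candidate_pauses) - baseline) / spread
    return z_score > z_threshold
```

A flag like this is only a prompt for review. Long pauses can just as easily mean a slow connection or a careful reader, which is why such signals are usually combined with others.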

Leveraging NLP for Log Analysis and Threat Prediction

Modern AI security isn’t just about watching through a webcam; it’s also about reading between the digital lines. Natural Language Processing (NLP) has emerged as a game-changer for threat detection. These systems sift through mountains of exam data and activity logs to pick up on unusual behaviors. For instance, if a test-taker repeatedly uses similar phrasing or displays answer patterns that resemble AI-generated text, it raises a red flag.

Pair that with a deep learning technique like Convolutional Neural Networks (CNNs), and the system gets even sharper. Well-trained CNNs can analyze visual data and spot inconsistencies in the test environment, like subtle background changes, unregistered faces, or suspicious objects.

All of this happens behind the scenes, in real time. The goal is to level the playing field so that honest candidates aren’t overshadowed by tech-savvy cheaters.
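The "similar phrasing" signal mentioned above can be sketched with a basic overlap measure. The example below scores pairs of written responses by the Jaccard similarity of their word trigrams and lists the pairs above a threshold. This is a deliberately simple stand-in: the function names and the 0.5 threshold are assumptions, and it does not attempt to detect AI-generated text, which is a much harder problem.

```python
import re


def word_ngrams(text: str, n: int = 3) -> set:
    """Return the set of lowercase word n-grams in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: str, b: str, n: int = 3) -> float:
    """Share of n-grams the two texts have in common (0.0 to 1.0)."""
    grams_a, grams_b = word_ngrams(a, n), word_ngrams(b, n)
    if not grams_a or not grams_b:
        return 0.0
    return len(grams_a & grams_b) / len(grams_a | grams_b)


def similar_pairs(answers: dict, threshold: float = 0.5) -> list:
    """List pairs of response IDs whose phrasing overlaps heavily."""
    ids = sorted(answers)
    return [
        (x, y)
        for i, x in enumerate(ids)
        for y in ids[i + 1:]
        if jaccard(answers[x], answers[y]) >= threshold
    ]
```

The same comparison could be run between one candidate's responses and a reference set, for example previously leaked answers, depending on what the reviewer wants to surface.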

The Power of Adaptive Testing and Large Item Banks

Security doesn’t stop with monitoring. Smart test design also plays a huge role in maintaining integrity.

Making It Harder to Share Answers

Language testing platforms are now using adaptive testing, which adjusts question difficulty based on each candidate’s responses. So even if two candidates sit side by side, they are unlikely to see the same sequence of questions.
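Here is a minimal sketch of the adaptive idea, assuming a simple "staircase" rule: move up one difficulty level after a correct answer and down one after an incorrect answer. Operational adaptive tests typically rely on more sophisticated statistical models, so treat the function names and the five-level scale as illustrative assumptions only.

```python
def next_difficulty(current: int, was_correct: bool, lowest: int = 1, highest: int = 5) -> int:
    """Step difficulty up after a correct answer, down after an incorrect one."""
    step = 1 if was_correct else -1
    return min(highest, max(lowest, current + step))


def run_adaptive_test(responses, start: int = 3) -> list:
    """Return the difficulty level administered for each question.

    responses: iterable of booleans, True meaning the answer was correct.
    """
    level = start
    administered = []
    for was_correct in responses:
        administered.append(level)
        level = next_difficulty(level, was_correct)
    return administered
```

Two candidates who answer differently diverge after the first question: `run_adaptive_test([True, True, False])` walks through levels 3, 4, 5, while `run_adaptive_test([False, False, True])` walks through 3, 2, 1.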

Rotating Questions and Estimating Difficulty Levels

Having thousands of question variations (thanks to large item banks) means there’s no single version of a test to memorize or leak. And with difficulty estimations powered by AI, test creators can ensure fairness without compromising security.
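Question rotation from a large item bank can be sketched as a per-candidate random draw. In the example below, each candidate gets a form built by sampling a fixed number of items from every difficulty level, with the random generator seeded by the candidate ID so the same form can be rebuilt for review. The bank structure, function name, and seeding choice are assumptions made for illustration.

```python
import random


def build_form(item_bank: dict, candidate_id: str, per_level: int = 2) -> list:
    """Draw a test form for one candidate from a difficulty-stratified bank.

    item_bank: maps a difficulty level to a list of item IDs (hypothetical layout).
    Seeding with the candidate ID makes the draw reproducible for audits.
    """
    rng = random.Random(candidate_id)
    form = []
    for level in sorted(item_bank):
        # Every form gets the same number of items per level, which keeps
        # overall difficulty comparable while the specific items vary.
        form.extend(rng.sample(item_bank[level], per_level))
    return form
```

A production system would more likely seed from a secret value rather than the raw candidate ID, so that forms cannot be predicted in advance.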

The Human Touch: Combining AI With Human Oversight

Let’s not forget the human side. Even the best AI needs human checks. That’s why most systems blend automated proctoring with live or record-and-review human proctors.

This hybrid model allows for greater accuracy in identifying false positives and understanding context. For example, a sudden movement might not be cheating, just someone stretching.

Conclusion

The rise of AI cheating and digital exam fraud is no joke, especially in the realm of language testing, where the consequences of dishonest results can ripple into immigration, job placement, and academic credibility. But thankfully, we’re not defenseless.

Talview’s secure testing tools, such as the Alvy AI proctoring agent, secondary cameras, 360-degree environment checks, and a cloud-level security framework, are not just tech buzzwords. They’re the guardians of exam integrity in our modern world. As long as providers stay ahead of the curve with smarter tools and more robust security layers, test-takers can rest assured that the playing field remains fair and that honesty still matters.
